Augmentir’s 6 Laws of AI Agents define the guardrails for safe, ethical, and accountable agents in manufacturing and industrial environments.

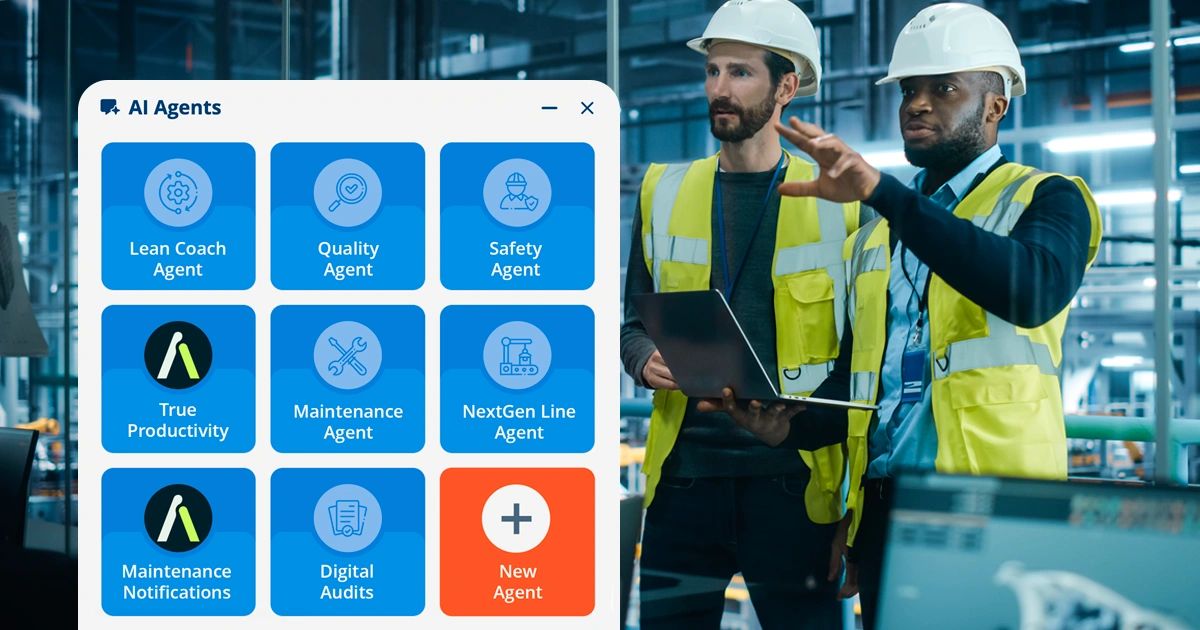

As AI agents become more deeply embedded in business operations, they carry tremendous potential but also significant responsibility. At Augmentir, we believe that trust, accountability, and safety must form the foundation of every AI deployment. That’s why we developed our 6 Laws of AI Agents: guiding principles that ensure AI systems operate transparently, responsibly, and safely in real-world environments. These laws are designed not only to safeguard organizations and individuals but also to help businesses realize the true value of AI without compromising integrity or safety.

The 6 Laws of AI Agents:

- Transparency in Execution

- Clear Ownership

- AI Origin Disclosure

- Persistent AI Disclosure

- Human-in-the-Loop for Impactful Actions

- No GenAI for Life-Critical Actions

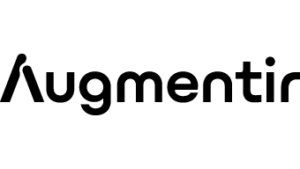

1. Transparency in Execution

All agent activities must be observable. This includes what instructions were given, which tools were used, and what outcomes were produced. Transparency ensures traceability, making it clear how and why decisions were made.

✔Summary: AI must never be a “black box.” Clear visibility builds trust and accountability.
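To make the transparency requirement concrete, here is a minimal Python sketch. It assumes an agent runtime that lets you hook each tool invocation, and it captures the three things this law names (the instruction given, the tools used, and the outcome produced) in one serializable record. The class and method names (`AgentTrace`, `ToolCall`, `record_tool_call`) and the example agent and tool names are hypothetical, not part of any Augmentir API.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ToolCall:
    """One tool invocation made by the agent (hypothetical schema)."""
    tool: str
    arguments: dict
    result: str


@dataclass
class AgentTrace:
    """Record of a single agent run: instruction, tools used, and outcome."""
    agent_id: str
    instruction: str
    tool_calls: List[ToolCall] = field(default_factory=list)
    outcome: Optional[str] = None
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def record_tool_call(self, tool: str, arguments: dict, result: str) -> None:
        # Called by the (assumed) runtime hook every time the agent uses a tool.
        self.tool_calls.append(ToolCall(tool, arguments, result))

    def finish(self, outcome: str) -> None:
        self.outcome = outcome

    def to_json(self) -> str:
        # asdict() recurses into the nested ToolCall dataclasses in the list.
        return json.dumps(asdict(self), indent=2)


# Usage with made-up values:
trace = AgentTrace(
    agent_id="safety-triage-agent",
    instruction="Summarize open safety reports for Line 3",
)
trace.record_tool_call("search_reports", {"line": "3", "status": "open"}, "2 reports found")
trace.finish("Summary delivered to supervisor")
print(trace.to_json())
```

Writing one such record per run to an append-only store is one way to answer "how and why" a decision was made after the fact; the choice of storage backend is left open here.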

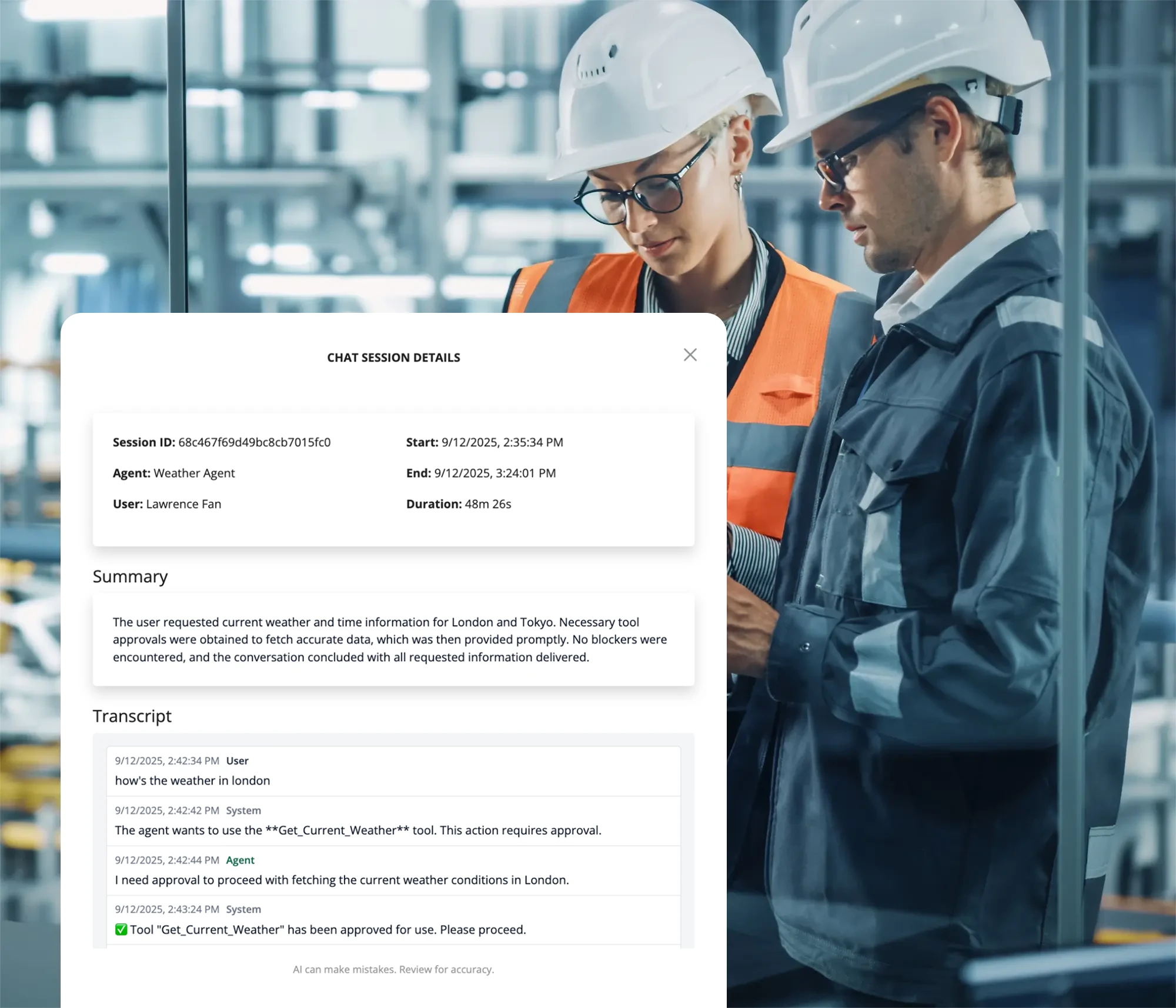

2. Clear Ownership

Every AI agent must have a clearly defined human or organizational owner responsible for its decisions and actions. This ownership must be explicitly documented to prevent ambiguity and ensure accountability at all times.

✔Summary: AI is powerful, but responsibility always rests with people, not machines.

3. AI Origin Disclosure

Whenever an agent provides an answer, recommendation, or decision, it must clearly state that the output was generated by AI and acknowledge that AI can make mistakes. This sets proper expectations and reinforces responsible use.

✔Summary: Clear disclosure prevents overreliance on AI and keeps human judgment central.

4. Persistent AI Disclosure

If an agent’s AI-generated recommendation or content is shared outside its native system (e.g., posted in Microsoft Teams or another platform), the AI origin and disclaimer must remain attached. Transparency should travel with the content wherever the information is shared.

✔Summary: AI-origin labels must stay attached, ensuring clarity across platforms.
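Laws 3 and 4 can be enforced together by treating the disclaimer as part of the content object rather than as display decoration. The Python sketch below is illustrative only: `AIContent`, `export_for_sharing`, and the disclaimer wording are hypothetical. Because the exported text already contains the label, copying it into Microsoft Teams or another platform copies the AI origin along with it, and export fails outright if the label is missing.

```python
from dataclasses import dataclass

# Hypothetical wording; a real deployment would use its own approved text.
AI_DISCLAIMER = "Generated by AI. AI can make mistakes; verify before acting."


@dataclass(frozen=True)
class AIContent:
    """AI-generated content that carries its origin label with it."""
    body: str
    agent_id: str
    disclaimer: str = AI_DISCLAIMER

    def render(self) -> str:
        """Display form inside the native system (Law 3)."""
        return f"{self.body}\n\n[{self.disclaimer} Source agent: {self.agent_id}]"


def export_for_sharing(content: AIContent) -> str:
    """Plain-text form for posting to another platform (Law 4).

    The label is embedded in the returned string itself, so whatever
    copies the text also copies the disclosure.
    """
    if not content.disclaimer.strip():
        raise ValueError("AI-generated content cannot be shared without its disclaimer")
    return content.render()


# Usage with made-up values:
rec = AIContent(
    body="Recommend inspecting conveyor belt 4 for wear.",
    agent_id="maintenance-advisor",
)
message = export_for_sharing(rec)
print(message)
```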

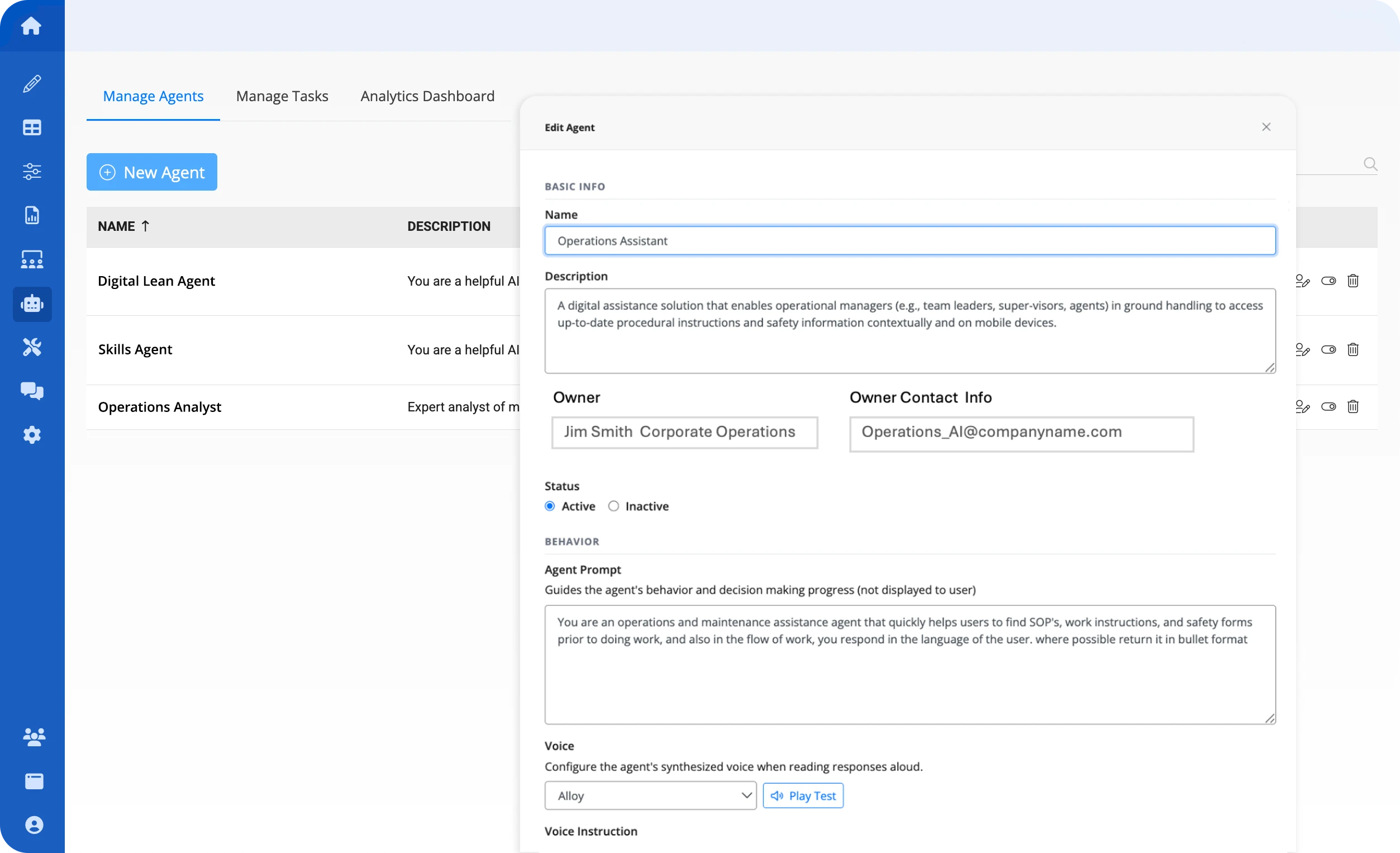

5. Human-in-the-Loop for Impactful Actions

Any action that creates, modifies, or deletes a data item that could affect operational outcomes must receive human review and approval before it is completed. For example, suppose a safety report notes oil on a walkway. If an agent attempts to close the issue before the spill has been cleaned up, a human must approve the closure first.

✔Summary: AI can recommend actions, but humans must approve decisions with real-world consequences.
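A minimal sketch of such an approval gate, assuming agents can reach operational data only through a queue rather than writing to it directly. It replays the oil-on-walkway example: the agent's proposal to close the report stays pending until a named person reviews it. `ApprovalQueue`, `ProposedAction`, the in-memory `dict` standing in for the data store, and the reviewer name are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING_APPROVAL = "pending_approval"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ProposedAction:
    """A create/modify/delete an agent wants to make (hypothetical schema)."""
    action: str       # "create", "modify", or "delete"
    item_id: str
    change: dict
    proposed_by: str  # agent id
    status: Status = Status.PENDING_APPROVAL
    reviewer: Optional[str] = None


class ApprovalQueue:
    """Holds agent-proposed changes until a named human approves or rejects them."""

    def __init__(self, data_store: dict):
        self.data_store = data_store
        self.pending: List[ProposedAction] = []

    def propose(self, proposal: ProposedAction) -> None:
        # Agents can only enqueue; nothing is written to the data store here.
        self.pending.append(proposal)

    def review(self, proposal: ProposedAction, reviewer: str, approve: bool) -> None:
        # Only this human-driven step can apply a change.
        proposal.reviewer = reviewer
        if approve:
            proposal.status = Status.APPROVED
            self._apply(proposal)
        else:
            proposal.status = Status.REJECTED
        self.pending.remove(proposal)

    def _apply(self, proposal: ProposedAction) -> None:
        if proposal.action == "delete":
            self.data_store.pop(proposal.item_id, None)
        else:
            self.data_store.setdefault(proposal.item_id, {}).update(proposal.change)


# Usage with made-up values:
issues = {"SR-101": {"description": "Oil on walkway", "state": "open"}}
queue = ApprovalQueue(issues)
close = ProposedAction("modify", "SR-101", {"state": "closed"}, proposed_by="safety-agent")
queue.propose(close)
print(issues["SR-101"]["state"])  # still "open": no human has approved yet
queue.review(close, reviewer="j.smith", approve=False)  # cleanup not yet confirmed
```

The key design choice is that `propose` never touches the data store; only `review` with `approve=True` applies a change, so an agent cannot complete an impactful action on its own.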

6. No GenAI for Life-Critical Actions

Generative AI must not be used to perform actions that could physically harm a person, control equipment, or alter settings that impact human safety. These actions require deterministic, verifiable code and strict safety protocols.

✔Summary: AI can assist, but life-critical actions must always remain human-controlled.
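One way to honor this law in software is to make the boundary structural: tools that control equipment or change safety settings are flagged, and the dispatcher used by generative AI agents refuses them outright instead of merely asking for approval. This is a hypothetical Python sketch; the `life_critical` flag, `dispatch_agent_tool_call`, and the stub tools are illustrative assumptions, and the deterministic, validated control path where those actions belong is outside its scope.

```python
from dataclasses import dataclass
from typing import Callable, Dict


class LifeCriticalActionBlocked(Exception):
    """Raised when a generative AI agent requests a life-critical tool."""


@dataclass(frozen=True)
class Tool:
    name: str
    func: Callable[..., str]
    life_critical: bool  # True for equipment control or safety-setting changes


def dispatch_agent_tool_call(tools: Dict[str, Tool], name: str, **kwargs) -> str:
    """Entry point used only by GenAI agents (hypothetical design).

    Life-critical tools are refused rather than queued for approval;
    they must run through separate deterministic, human-controlled code.
    """
    tool = tools[name]
    if tool.life_critical:
        raise LifeCriticalActionBlocked(
            f"'{name}' affects human safety and cannot be invoked by a GenAI agent"
        )
    return tool.func(**kwargs)


# Usage with stub tools and made-up values:
tools = {
    "read_sensor": Tool("read_sensor", lambda sensor_id: f"{sensor_id}: 72.5 C", life_critical=False),
    "set_press_speed": Tool("set_press_speed", lambda rpm: f"speed set to {rpm}", life_critical=True),
}
print(dispatch_agent_tool_call(tools, "read_sensor", sensor_id="T-12"))
```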

Governing the Future of AI Responsibly

The 6 Laws of AI Agents provide a blueprint for deploying AI responsibly in the enterprise. By emphasizing transparency, ownership, disclosure, human oversight, and safety, organizations can embrace AI innovation without compromising trust.

At Augmentir, we believe AI should augment, not replace, human intelligence, and these laws ensure that principle is upheld.